Handcrafted RSSHub Route Failed

A few days ago, I snagged a free .news domain from Namecheap and registered hy2.news. I didn’t have much use for it, so I decided to set up a personal news feed page. Because it’s a dynamic page, I brought out WordPress and used an RSS plugin to make it happen. The page is live at: Hyruo News

The page is up, but sourcing the RSS feed became an issue. So, I started by trying to scrape the Nodeseek forum, where I’ve been active recently. After hours of effort, I still failed.

Handcrafting an RSSHub Route

The official RSSHub development tutorial is a bit jumpy, but the main process is as follows.

Preliminary Work

- Clone the RSSHub repository (might take over half an hour if your computer is slow): `git clone https://github.com/DIYgod/RSSHub.git`

- Install the latest version of Node.js (version must be greater than 22) from the Node.js Official Site

- Install dependencies: `pnpm i`

- Run: `pnpm run dev`

Developing the Route

Developing the route is relatively simple. Open the RSSHub\lib\routes directory, create a new folder under it, such as nodeseek, and then add two files namespace.ts and custom.ts in that folder.

- namespace.ts File

This file can be copied from the official tutorial, or copied from another folder in the lib\routes directory and modified.
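The original listing for this file is not reproduced here, so the following is only a minimal sketch of what a namespace.ts for this route might look like. The field values (`NodeSeek`, `nodeseek.com`, `zh-CN`) are assumptions, and the `Namespace` interface is a local stand-in so the snippet can be read on its own; inside the RSSHub repository the type would normally be imported from `@/types` instead.

```typescript
// Local stand-in for RSSHub's Namespace type (assumption: only a few fields shown).
// Inside the repository this would be: import type { Namespace } from '@/types';
interface Namespace {
    name: string; // human-readable site name
    url?: string; // site domain, without the protocol
    description?: string;
    lang?: string; // primary language of the site
}

// Sketch of lib/routes/nodeseek/namespace.ts; all values are illustrative.
export const namespace: Namespace = {
    name: 'NodeSeek',
    url: 'nodeseek.com',
    lang: 'zh-CN',
};
```

The folder name (nodeseek here) becomes the first segment of the route path, so this file mostly just describes the site the routes in that folder belong to.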

- custom.ts File

This is the main file for developing the route. It can be named according to the target website’s structure; looking at other folders will give you the idea. The difficulty lies in the specific content.
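The route code itself is not reproduced here. As a rough illustration of the part that does not depend on the scraping step, the sketch below turns a list of already-scraped posts into the kind of object an RSSHub handler returns (a feed `title`, `link`, and an `item` array). Everything in it is an assumption for illustration: the `ScrapedPost` shape, the `buildFeed` helper, and the base URL are hypothetical, and the local interfaces stand in for RSSHub’s own types. A real route file would additionally export a `route` object whose handler fetches and parses the page.

```typescript
// Local stand-ins for the feed shapes an RSSHub handler returns (assumption).
interface FeedItem {
    title: string;
    link: string;
    author?: string;
    pubDate?: Date;
}

interface Feed {
    title: string;
    link: string;
    item: FeedItem[];
}

// Hypothetical shape of one post after scraping; the forum's real markup is not shown here.
interface ScrapedPost {
    title: string;
    href: string; // may be relative, e.g. '/post-1-1'
    author?: string;
    postedAt?: string; // ISO 8601 timestamp, if the page exposes one
}

const BASE_URL = 'https://www.nodeseek.com'; // assumed base URL

// Convert scraped posts into feed items, resolving relative links against BASE_URL.
function buildFeed(posts: ScrapedPost[]): Feed {
    return {
        title: 'NodeSeek',
        link: BASE_URL,
        item: posts.map((post) => ({
            title: post.title.trim(),
            link: post.href.startsWith('http') ? post.href : BASE_URL + post.href,
            author: post.author,
            pubDate: post.postedAt ? new Date(post.postedAt) : undefined,
        })),
    };
}

const demoFeed = buildFeed([{ title: '  Hello  ', href: '/post-1-1', author: 'someone' }]);
console.log(demoFeed.item[0].link);
```

Keeping this mapping separate from the fetching code makes it easy to check locally, which matters when the fetch itself is the unreliable part.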

Summary of Main Issues

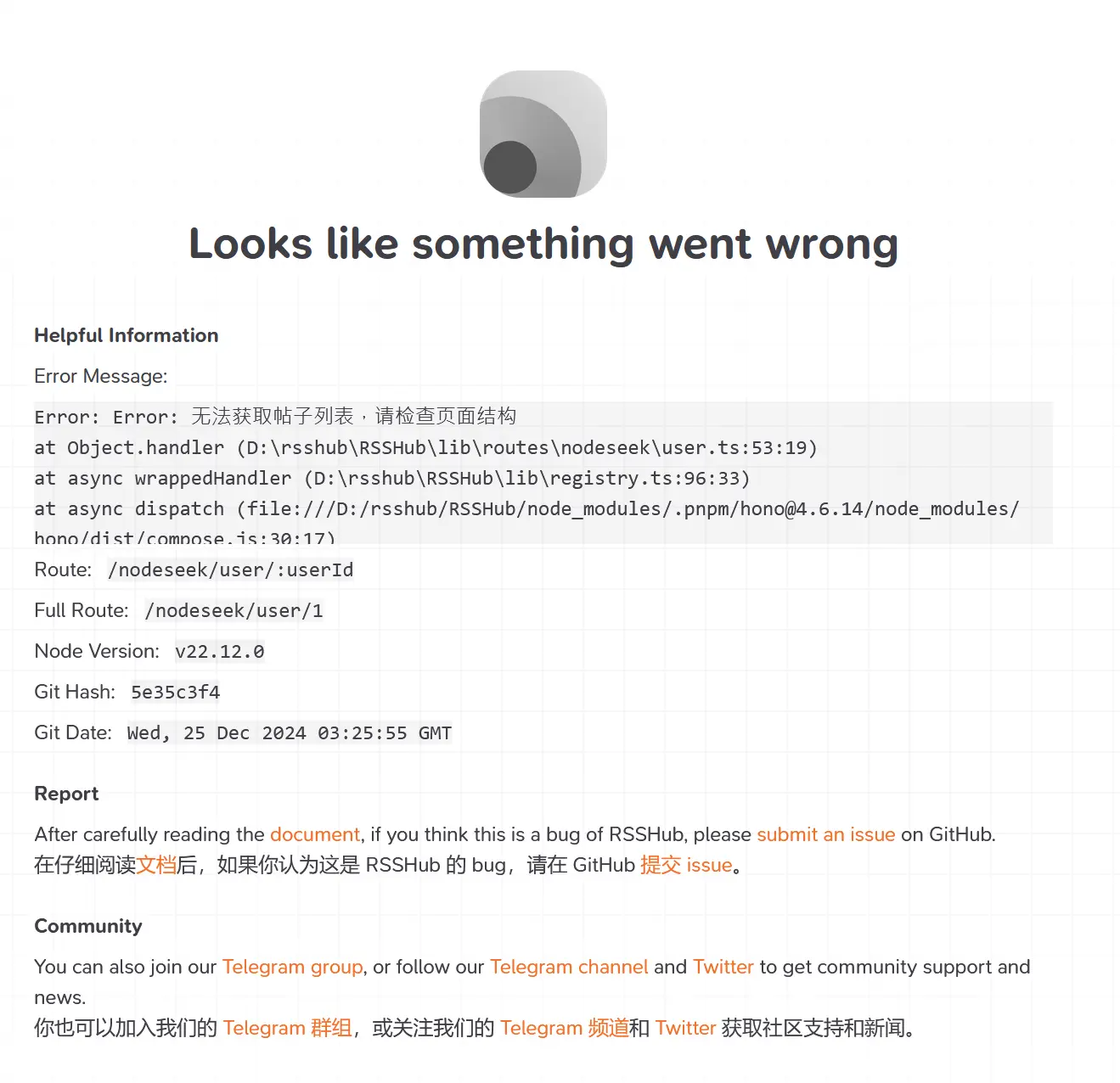

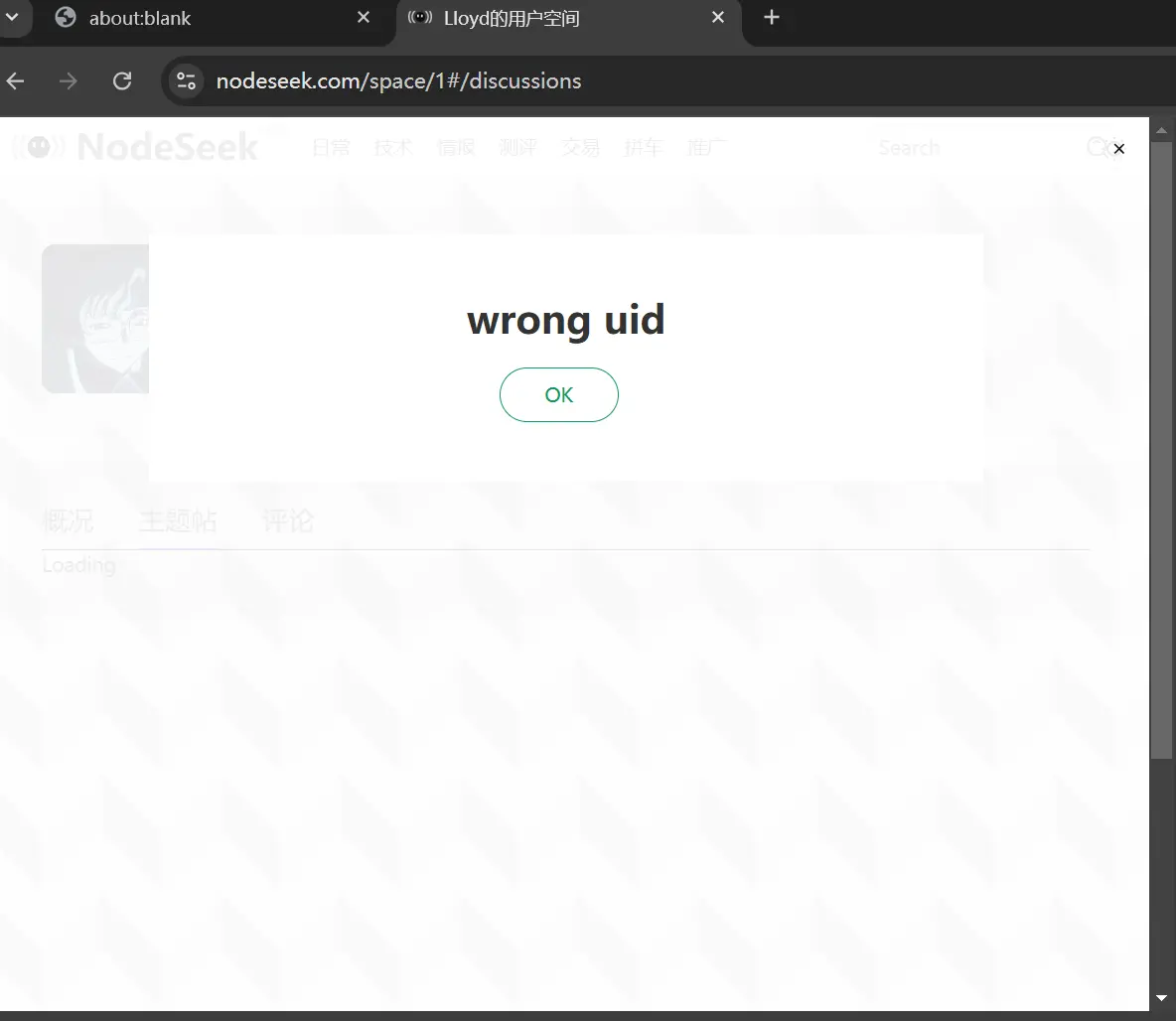

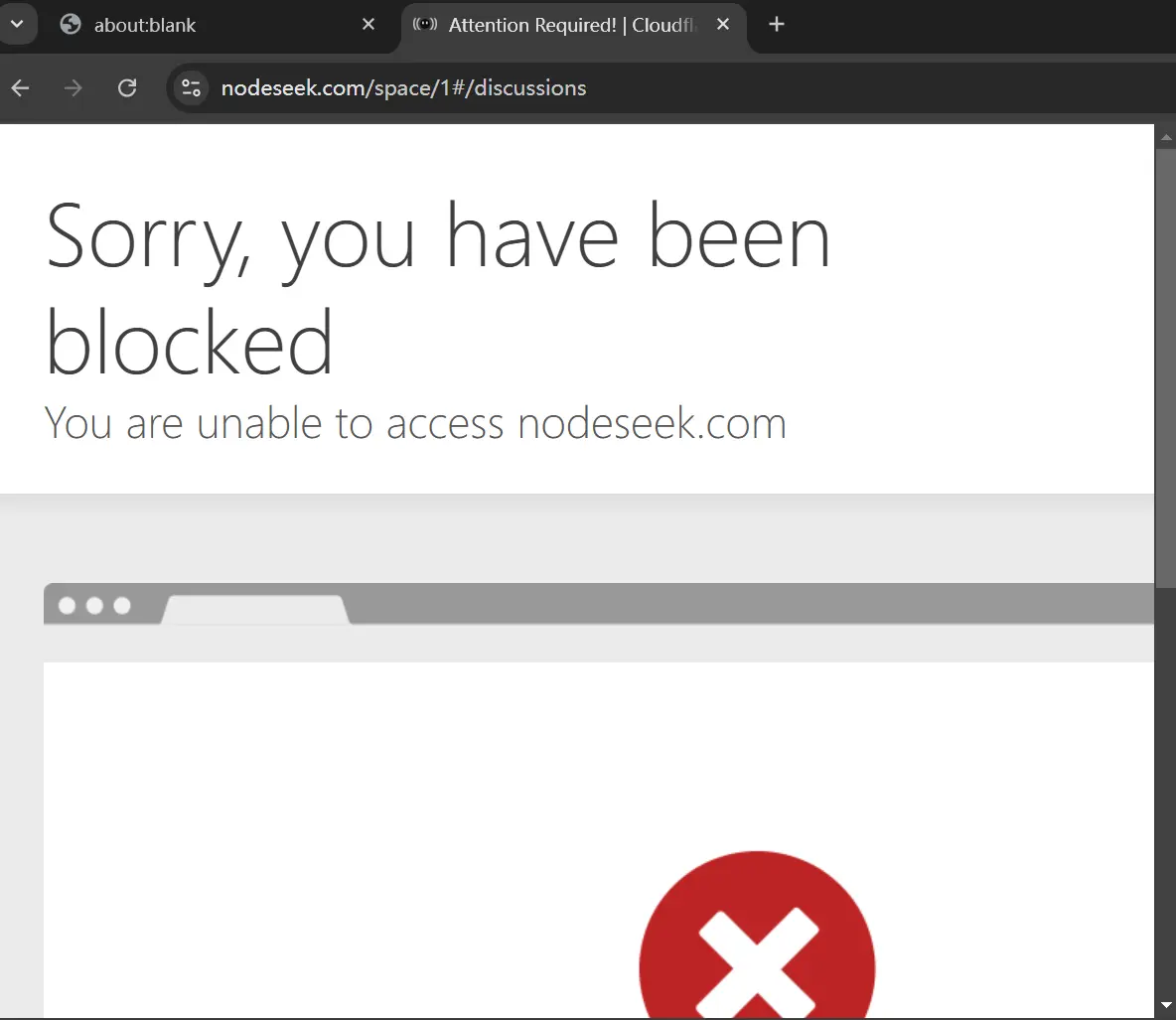

In short, the main reason for the failure in handcrafting the Nodeseek route was the inability to bypass Nodeseek’s anti-crawling measures and Cloudflare’s protection.

The most heavy-handed option RSSHub has is to simulate browser behavior with Puppeteer to get around anti-crawling measures. However, machine-simulated behavior is easily detected by platforms like Cloudflare.

During local testing, I had about a 50% success rate, and that was with the latest version of Puppeteer. If I used the Puppeteer version from RSSHub’s official dependencies, the success rate was less than 10%. Considering that submitting to RSSHub requires double review, the success rate is just too low.
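To put those success rates in perspective, here is a small back-of-the-envelope sketch. It assumes each attempt succeeds independently with the same probability, which is probably optimistic for Cloudflare (a blocked client may well keep getting blocked), and both helper names are made up for this illustration.

```typescript
// Chance that at least one of `attempts` independent tries succeeds,
// given a per-attempt success rate p (0 < p < 1).
function chanceOfAnySuccess(p: number, attempts: number): number {
    return 1 - Math.pow(1 - p, attempts);
}

// Smallest number of independent attempts needed to reach `target` overall probability.
function attemptsNeeded(p: number, target: number): number {
    return Math.ceil(Math.log(1 - target) / Math.log(1 - p));
}

console.log(chanceOfAnySuccess(0.5, 3)); // 0.875
console.log(attemptsNeeded(0.5, 0.9)); // 4
console.log(attemptsNeeded(0.1, 0.9)); // 22
```

Even under that generous assumption, a 10% per-attempt rate would need around 22 tries to reach a 90% chance of a single successful fetch, which is not practical for a route that runs on every feed refresh.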

For now, I have to reluctantly give up.

When It Rains, It Pours

This morning, I woke up to find that the sky had fallen: six of my free *.US.KG domains were down because the parent domain had stopped resolving.

Then, at noon, the sky fell again. The .news domain I had just set up was suspended by the registrar. They sent me an email asking for an explanation of why my personal information changed during the registration process, and then forcibly redirected the NS to an IP address no one recognizes.

Well, that’s my own fault. I messed up during registration. I used the browser’s form auto-fill directly and accidentally filled in all my real information. Then, when I tried to change it back, an error occurred as soon as I made the changes.

PS: In the afternoon, *.us.kg returned to normal. But I feel like I won’t love it anymore.